TL;DR: L2s offer scalability by moving computation and storage off-chain (Plasma). Because off-chain storage raises censorship-resistance concerns, modern L2s address this data-availability problem by publishing on the parent chain the minimum data required to validate a transfer. But publishing comes at a cost. Optimistic rollups (ORU) defer the cost of validation until after the fact (fraud proofs), while zero-knowledge rollups (ZKR) pay it upfront (validity proofs). A multitude of hybrid solutions exists in between. For a dApp, the choice of L2 should be determined by the kinds of risk it is willing to expose its users to: centralisation risk, game-theoretical risk, technical and implementation risk, and usability risk.

The concept of a layer-two (L2) solution refers to a secondary framework or protocol built on top of an existing blockchain. A permissionless blockchain strives to balance decentralisation, security, and scalability, and it is generally acknowledged in distributed-systems research that achieving all three in one system is challenging. The main goal of scalability research is therefore to relax one side of this scalability trilemma (the Impossible Triangle).

To attain decentralisation and immutability, network participants must reach consensus, which requires each participant to validate every state change. This creates the scalability problem: the throughput of the whole chain is bounded by the throughput of a single validator.

Scalability research can be categorised into three parts:

- Increasing the throughput per validator by introducing optimised protocols (e.g., DAG, Hashgraph, Valance).

- Parallel processing to reduce the dependency on validator throughput (e.g., Sharding, where each shard processes its own transactions).

- Moving work off-chain to alleviate transactions-per-second (TPS) constraints while anchoring batched state changes (checkpoints) to the parent chain for security: i.e., L2 solutions.

This third method is defined as follows:

A layer-two protocol allows transactions between users through the exchange of authenticated messages via a medium which is outside of, but tethered to, a layer-one blockchain. Authenticated assertions are submitted to the parent-chain only in cases of a dispute, with the parent chain deciding the outcome of the dispute. Security and non-custodial properties of a layer-two protocol rely on the consensus algorithm of the parent-chain. ¹

Side-chains that run their own consensus protocol and rely on a smart-contract bridge to lock and unlock funds between chains are traditionally considered separate from L2 solutions. However, as we will see, this formal distinction is blurring as L2s gradually incorporate consensus mechanisms of their own.

There are currently a myriad of L2 proposals of uneven merit. To distinguish between them, it is useful to recall their origins (I) before offering a framework for dApp adoption based on their trade-offs (II).

The origins of layer-two solutions

The idea of an orthogonal scaling solution (one that doesn't require changes to the underlying protocol) was first introduced in 2016 with the Bitcoin Lightning Network, and the Ethereum community was swift to port it in the form of state and payment channels (i). The practical limitations of channels led to the proposal of Plasma (ii), which later evolved into rollups (iii); the community's pragmatic approach then gave birth to Validium (iv).

Channels (i) encompass a vast range of research, such as state channels, generalised channel networks, direct channel networks, direct payment networks, payment channel hubs, etc. The common idea is to use a smart contract (SC) as an escrow service between two participants, allowing them to make multiple small transactions between themselves off-chain before submitting the final balance to the contract to settle the channel. Unfortunately, all these solutions suffer from two limitations: participation is closed (only the parties who funded a channel can transact through it), and each channel is application-specific. Although hops and hubs have been used to route payments across channels and work around these limitations, channels have gradually been abandoned in favour of more generalised scalability solutions.
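To make the escrow pattern concrete, here is a minimal Python sketch of a two-party payment channel under simplifying assumptions: the names `ChannelState`, `pay`, and `PaymentChannel` are hypothetical, signatures are omitted (in practice both parties sign every state), and the dispute window is reduced to "keep the highest-nonce state submitted before closing". Only opening and closing would touch the parent chain; every `pay` call is an off-chain message.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChannelState:
    """A balance update both parties agree on (signatures omitted in this sketch)."""
    nonce: int  # strictly increasing; a higher nonce supersedes older states
    balance_a: int
    balance_b: int


def pay(state: ChannelState, from_a: bool, amount: int) -> ChannelState:
    """Off-chain payment: the parties simply exchange a new state; nothing is sent to L1."""
    delta = -amount if from_a else amount
    new_a, new_b = state.balance_a + delta, state.balance_b - delta
    if amount <= 0 or min(new_a, new_b) < 0:
        raise ValueError("invalid amount or insufficient channel balance")
    return ChannelState(state.nonce + 1, new_a, new_b)


class PaymentChannel:
    """Toy escrow contract: opening and closing are the only on-chain steps."""

    def __init__(self, deposit_a: int, deposit_b: int) -> None:
        # Opening: both parties lock their deposits in the contract.
        self.total = deposit_a + deposit_b
        self.latest = ChannelState(0, deposit_a, deposit_b)
        self.closed = False

    def submit(self, state: ChannelState) -> None:
        # Dispute phase: either party submits the newest state it holds;
        # the contract keeps the one with the highest nonce.
        if self.closed:
            raise RuntimeError("channel already settled")
        if state.balance_a + state.balance_b != self.total:
            raise ValueError("state does not conserve the escrowed funds")
        if state.nonce > self.latest.nonce:
            self.latest = state

    def close(self) -> tuple[int, int]:
        # Settlement: pay out the final balances in one on-chain transaction.
        self.closed = True
        return self.latest.balance_a, self.latest.balance_b
```

The nonce is what lets the contract reject a stale state: a party who submits an outdated balance can be overridden by the counterparty submitting a newer one before the channel closes.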

In search of a more general-purpose scalability solution, Buterin & Poon proposed Plasma (ii) in 2017. Plasma batches off-chain transactions into a Merkle tree and publishes its root to a base-chain smart contract at regular intervals. A user locks assets in the smart contract, interacts on the Plasma chain, and withdraws on the base chain by submitting a Merkle proof of their transactions. This opens a challenge period in which anyone "can try to use other Merkle branches to invalidate the exit by proving that either (i) the sender did not own the asset at the time they sent it, or (ii) they sent the asset to someone else at some later point in time" ¹. Limiting the base-chain transaction history to a Merkle root provides data scalability, while limiting on-chain computation to exit challenges (everything else is executed off-chain) provides computational scalability. But researchers quickly identified game-theoretic issues surrounding data availability (notably the mass-exit problem), and though some teams attempted to alleviate them through an additional consensus layer (e.g., the StarkEx DAC), this path has been abandoned.
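The commitment scheme at the heart of Plasma can be sketched in a few lines of Python. This is an illustrative Merkle tree, not any Plasma implementation: SHA-256 stands in for whichever hash function a real contract uses, the helper names are hypothetical, and odd levels are padded by duplicating the last node (production trees usually also separate leaf and internal-node hashing). The operator publishes only `merkle_root(...)`; a user exiting with a transaction supplies the transaction plus its `merkle_proof`, which the contract checks with `verify`.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(leaves: list[bytes]) -> bytes:
    """Hash leaves pairwise up to a single root (last node duplicated on odd levels)."""
    level = [h(leaf) for leaf in leaves]
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


def merkle_proof(leaves: list[bytes], index: int) -> list[tuple[bytes, bool]]:
    """Sibling hashes from leaf to root; the bool is True when the sibling sits on the left."""
    level = [h(leaf) for leaf in leaves]
    proof = []
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])
        sibling = index ^ 1  # the other node of the pair
        proof.append((level[sibling], sibling < index))
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return proof


def verify(leaf: bytes, proof: list[tuple[bytes, bool]], root: bytes) -> bool:
    """Recompute the path from the leaf and compare with the published root."""
    node = h(leaf)
    for sibling, sibling_is_left in proof:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == root
```

The proof grows with the logarithm of the batch size, which is why publishing a single root per batch keeps on-chain data small while still letting any user prove inclusion.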

Nevertheless, the core idea of batching was preserved: with advances in zero-knowledge cryptography came the rise of rollups (iii). Building on the idea of off-chain computation, rollups combine multiple L2 transactions into a batch (i.e., a rollup) and address Plasma's data-availability issue by publishing a compressed version of the transfer data on the parent chain. Validation then relies either on generating a zero-knowledge proof upfront for each batch (a validity proof), or on an "optimistic" approach that considers each transaction valid by default until someone challenges that assumption (a fraud proof).

Matter Labs offers an excellent definition of rollups: “a layer-two scaling solution similar to Plasma: a single mainchain contract holds all funds as well as a succinct cryptographic commitment to a larger ‘sidechain’ state (usually a Merkle tree of accounts, balances, and their states). The sidechain state is maintained by users and operators offchain, without reliance on L1 storage (which is the source of the biggest scalability win).” Rollups stand apart from Plasma by publishing a succinct record of each transaction on the base chain. Because these records are published in bulk as transaction CALLDATA (which is more than an order of magnitude cheaper than L1 contract storage), anyone can access the data and verify the validity of the transactions, which solves Plasma’s data-availability problem. This approach comes in two flavours.
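A rough sense of why calldata matters can be had from Ethereum's gas schedule. The sketch below assumes mainnet pricing as of 2021 (16 gas per non-zero and 4 gas per zero calldata byte after EIP-2028, and 20,000 gas to write a non-zero value into an empty 32-byte storage slot) and ignores the 21,000-gas base transaction cost and access-list surcharges; the function names are hypothetical.

```python
# Gas constants assumed from Ethereum mainnet rules as of 2021.
GAS_PER_ZERO_CALLDATA_BYTE = 4      # EIP-2028
GAS_PER_NONZERO_CALLDATA_BYTE = 16  # EIP-2028 (down from 68)
GAS_PER_NEW_STORAGE_SLOT = 20_000   # SSTORE of a non-zero value into an empty slot


def calldata_gas(payload: bytes) -> int:
    """Gas to include the payload as transaction calldata."""
    return sum(
        GAS_PER_NONZERO_CALLDATA_BYTE if b else GAS_PER_ZERO_CALLDATA_BYTE
        for b in payload
    )


def storage_gas(payload: bytes) -> int:
    """Gas to write the payload into fresh 32-byte contract storage slots."""
    slots = -(-len(payload) // 32)  # ceiling division into 32-byte words
    return slots * GAS_PER_NEW_STORAGE_SLOT
```

For 32 non-zero bytes this gives 512 gas as calldata versus 20,000 gas as fresh storage, roughly a 39x difference.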

Proposed by Barry Whitehat in late 2018, the idea of a rollup secured by zero-knowledge proofs (ZKPs) was first presented under the name Plasma Ignis. By generating a SNARK proof for each batch, the operator can rely on its cryptographic guarantees to reduce the size of the data published on the parent chain, and users can withdraw to L1 as soon as the proof is verified (full finality). This solution has become known as zk-rollups (ZKR).

Shortly afterwards, John Adler proposed Minimal Viable Merged Consensus, which has become known as optimistic rollups (ORU). Because L2 operators are assumed to be honest by default, transaction throughput is limited only by how much transaction data can be posted to L1. The scheme then relies on game-theoretic assumptions to prevent fraud: each state update posted to L1 opens a challenge period (estimated at 1 to 2 weeks) during which anyone can verify the validity of the previous state changes, and a user's withdrawal to L1 only completes once that period has elapsed. If an error is found, the bond of the L2 operator who submitted the faulty state change is slashed.
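The optimistic flow can be modelled as a toy L1 contract. In this Python sketch, an assumption-laden illustration rather than any project's contract, the one-week `CHALLENGE_PERIOD` is a hypothetical value at the low end of the range quoted above, the boolean `fraud_proven` stands in for re-executing the disputed state transition on L1, and a successful challenger receives the operator's entire bond. Real designs also revert every state root built on top of a fraudulent one, which is omitted here.

```python
from dataclasses import dataclass

CHALLENGE_PERIOD = 7 * 24 * 3600  # hypothetical: one week, in seconds


@dataclass
class StateCommitment:
    state_root: str
    operator: str
    bond: int
    posted_at: int
    slashed: bool = False


class OptimisticRollup:
    """Toy L1 contract: accepts state roots optimistically, allows fraud proofs in a window."""

    def __init__(self) -> None:
        self.commitments: list[StateCommitment] = []

    def post(self, state_root: str, operator: str, bond: int, now: int) -> int:
        # The operator posts a new state root with a bond; no validity check happens here.
        self.commitments.append(StateCommitment(state_root, operator, bond, now))
        return len(self.commitments) - 1

    def challenge(self, idx: int, fraud_proven: bool, now: int) -> int:
        """Return the bond awarded to the challenger (0 if the challenge fails or is too late)."""
        c = self.commitments[idx]
        if now >= c.posted_at + CHALLENGE_PERIOD or c.slashed or not fraud_proven:
            return 0
        c.slashed = True
        reward, c.bond = c.bond, 0  # slash the operator's bond
        return reward

    def can_withdraw(self, idx: int, now: int) -> bool:
        # Withdrawals against a state root are final only after the window closes unchallenged.
        c = self.commitments[idx]
        return not c.slashed and now >= c.posted_at + CHALLENGE_PERIOD
```

Withdrawals from an honest commitment still wait for the full window: the contract cannot tell honest roots from fraudulent ones until the window has closed, which is the source of ORUs' delayed finality.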

Finally, a hybrid solution named Validium applies validity proofs to Plasma-style off-chain data storage and claims to reach an order of magnitude higher TPS.

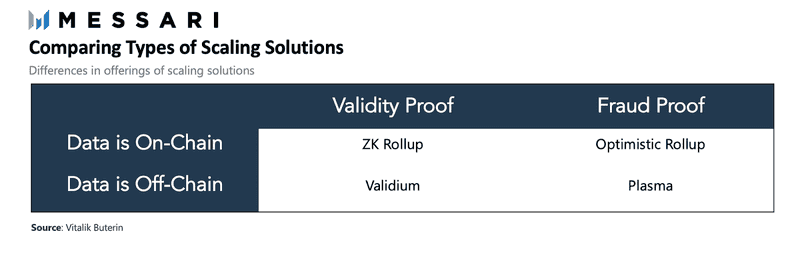

These different L2 solutions can be summarised by a 2x2 matrix.
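Assuming the two axes are the validation method and the location of transaction data, as the descriptions above suggest, the matrix can be encoded as a small lookup table. The enum and function names below are hypothetical.

```python
from enum import Enum


class Validation(Enum):
    VALIDITY_PROOF = "validity proof"  # proven upfront
    FRAUD_PROOF = "fraud proof"        # challenged after the fact


class DataAvailability(Enum):
    ON_CHAIN = "on-chain"    # transaction data published on L1
    OFF_CHAIN = "off-chain"  # only commitments published on L1


L2_MATRIX = {
    (Validation.VALIDITY_PROOF, DataAvailability.ON_CHAIN): "zk-rollup",
    (Validation.FRAUD_PROOF, DataAvailability.ON_CHAIN): "optimistic rollup",
    (Validation.VALIDITY_PROOF, DataAvailability.OFF_CHAIN): "Validium",
    (Validation.FRAUD_PROOF, DataAvailability.OFF_CHAIN): "Plasma",
}


def classify(validation: Validation, data: DataAvailability) -> str:
    """Name the L2 family occupying a given cell of the 2x2 matrix."""
    return L2_MATRIX[(validation, data)]
```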

When choosing an L2 solution, dApps should be aware of each option’s pros and cons.

A framework for dApp adoption

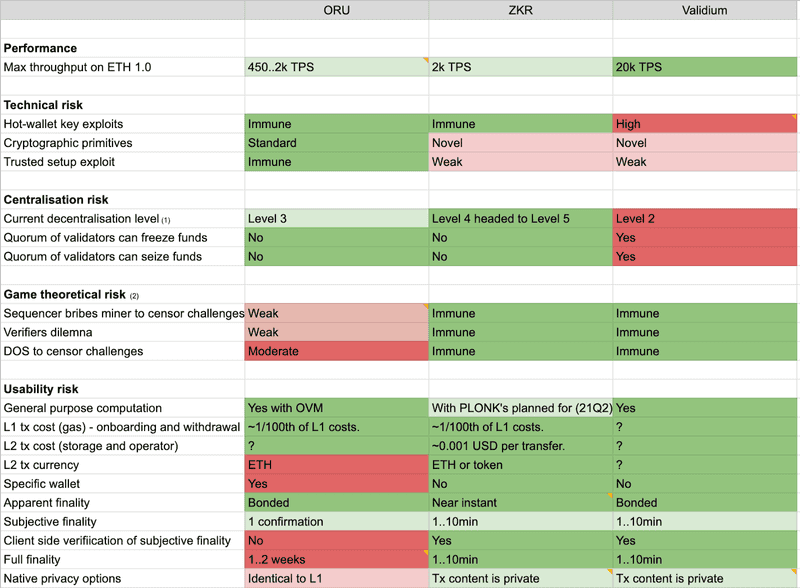

The trade-offs between these solutions require a dApp to evaluate which risks it is willing to carry. These risks may be divided into:

- Technical and implementation risk. Does the protocol use novel or battle-tested cryptographic primitives? Is the team capable of delivering on its roadmap? Does the team have adequate operational security to prevent its source code from being compromised?

- Centralisation risk. What is the level of decentralisation and censorship resistance of the protocol? Does it require trusting a centralised entity? Can it withstand a regulatory injunction?

- Game-theoretical risk. Does the protocol rely on game-theoretic incentives? Are there known exploits?

- Usability risk. How does the user interact with the protocol? When can the user consider a transfer final? How much does a transaction cost?

- Decentralisation levels (https://zksync.io/faq/decentralization.html):
  - i. Centralised custody (fully trusted): Coinbase
  - ii. Collective custody (trust in the honest majority): sidechains
  - iii. Non-custodial via fraud proofs (trust in the honest minority): optimistic rollups
  - iv. Multi-operator (trustless, weak censorship resistance): Cosmos
  - v. Peer-to-peer (trustless, strong censorship resistance): Ethereum

- For a review of challenges and their corresponding rebuttals: https://research.paradigm.xyz/rollups

All three solutions claim to offer general-purpose computation, which would allow smart contracts to run on L2. Although full EVM compatibility is often claimed to be reserved for ORUs, recursive ZKPs (e.g., built with PLONK) will allow ZKRs to run smart contracts as well. Aztec and zkSync intend to release in the second quarter of 2021. Estimated TPS figures for ORUs and ZKRs are close to identical. Validium offers roughly a 10x TPS increase, but at the cost of higher centralisation.
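The near-identical TPS of ORUs and ZKRs follows from both being bounded by the same resource: L1 space for batch data. The Python sketch below computes that upper bound, assuming L1 blocks are filled entirely with batch data; the function name is hypothetical and every parameter value in the example is chosen for round numbers rather than taken from any network.

```python
def calldata_bound_tps(
    gas_limit: int,
    bytes_per_tx: int,
    gas_per_byte: int,
    block_time_s: float,
    batch_overhead_gas: int = 0,
) -> float:
    """Upper bound on rollup TPS if L1 blocks carried nothing but batch data.

    batch_overhead_gas models a fixed per-block cost such as proof verification.
    """
    usable_gas = gas_limit - batch_overhead_gas
    txs_per_block = usable_gas // (bytes_per_tx * gas_per_byte)
    return txs_per_block / block_time_s
```

With these hypothetical inputs, `calldata_bound_tps(12_000_000, 10, 16, 15)` yields 5000.0 transactions per second. A ZKR pays an extra fixed `batch_overhead_gas` to verify its proof but can usually omit per-transaction signatures, which the proof already covers; Validium removes the per-byte data term altogether, which is why it can claim a higher ceiling.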

Because ORUs rely on standard cryptographic primitives, they carry less technical risk. However, their security model relies on delayed transaction finality: users must wait out the challenge period (several days or more) before withdrawing funds to L1, which reduces usability and exposes the protocol to known malicious game-theoretic exploits. Conversely, ZKRs provide fast, full finality once the validity proof is verified on L1, and avoid this class of exploits altogether.

Each solution has multiple implementations from competing teams.

- Optimistic rollups are championed by the likes of ‣, Arbitrum, Fuel Network, and Cartesi.

- zk-rollups by ‣, Loopring, Aztec, and Hermez.

- Validium by ‣, and zkPorter by Matter Labs.

(A comparison of different implementations is currently a work in progress.)

The choice of L2 depends on the dApp’s requirements. A high-frequency trading company may accept the centralisation risk of Validium. A gaming company may accept the game-theoretical risks of ORU, while a decentralised crypto payments solution would opt for the guarantees of ZKRs.
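The reasoning above can be expressed as a simple filter: list the risk categories each option exposes a dApp to, then keep the options whose exposures the dApp accepts. The mapping below is an illustrative paraphrase of this section's qualitative comparison, not a formal assessment, and the names are hypothetical.

```python
# Illustrative only: risk categories each option exposes a dApp to,
# paraphrasing the qualitative comparison in this section.
RISK_EXPOSURE = {
    "optimistic rollup": {"game-theoretical", "usability"},  # delayed finality
    "zk-rollup": {"technical"},                              # newer cryptography
    "Validium": {"technical", "centralisation"},             # off-chain data
}


def acceptable_options(accepted_risks: set[str]) -> list[str]:
    """Options whose every exposed risk category is one the dApp accepts."""
    return sorted(
        name for name, risks in RISK_EXPOSURE.items() if risks <= accepted_risks
    )
```

For example, a dApp that accepts only game-theoretical and usability risk is left with optimistic rollups, while one that accepts technical and centralisation risk could choose either Validium or a zk-rollup.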

Further reading

- Layer 2 primer: https://ethereum.org/en/developers/docs/layer-2-scaling/#zk-rollups

- Messari, Ecosystem of Ethereum Scaling Solutions (PDF)

- Vitalik's guide to rollups: https://vitalik.ca/general/2021/01/05/rollup.html

- SoK: Layer-Two Blockchain Protocols: https://eprint.iacr.org/2019/360.pdf

- zkRollup vs Validium: https://medium.com/matter-labs/zkrollup-vs-validium-starkex-5614e38bc263

- Comparison framework by Matter Labs: https://medium.com/matter-labs/evaluating-ethereum-l2-scaling-solutions-a-comparison-framework-b6b2f410f955

- Comparison framework by Kyber: https://blog.kyber.network/research-trade-offs-in-rollup-solutions-a1084d2b444

- Ethereum L2 research: https://ethresear.ch/c/layer-2/32

- TariLabs: https://tlu.tarilabs.com/scaling/layer2scaling-landscape/layer2scaling-survey.html

- History of Plasma and the mass-exit problem: https://media.consensys.net/the-state-of-ethereum-layer-2-protocol-development-2-f22b2603abd6

- State of optimistic rollups: https://medium.com/molochdao/the-state-of-optimistic-rollup-8ade537a2d0f

- ORU projects: https://docs.google.com/spreadsheets/d/1NqoMIDQk2gjjzm5Mleu9DIYudZbhVzXceC9lODt5VGs/edit#gid=0

- Optimistic game semantics: https://plasma.group/optimistic-game-semantics.pdf

- Awesome zkp: https://github.com/matter-labs/awesome-zero-knowledge-proofs

- The issue with channels: https://medium.com/fairlayer/why-lightning-and-raiden-networks-will-not-work-d1880e4bc294